AI Doctor, For Better Treatment, What You Must Know?

Posted 11 months ago

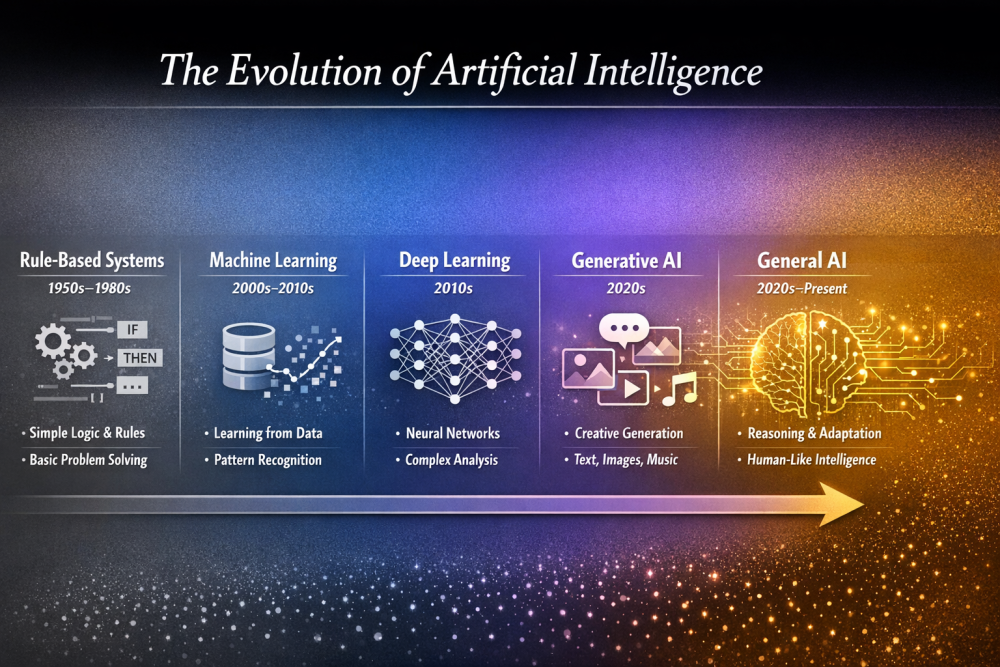

Artificial intelligence (AI) is rapidly penetrating almost every discipline, including how doctors diagnose diseases and make treatment decisions. The advent of AI-driven decision-support systems, with their sophisticated software that analyzes data to offer medical recommendations, presents a promising solution to the overburdened healthcare system. However, before fully embracing this potential, we must carefully consider the implications.

A recent study highlights the challenges of integrating AI into medical decision-making. Researchers from Germany have examined this issue from three key angles: ethics, human behavior, and technology. Their findings remind us that AI's success in medicine depends on much more than just processing power; it requires trust, accountability, and careful design.

The Ethical Dilemma: Who's Responsible When AI Makes a Mistake in Healthcare?

In traditional medical practice, a doctor is responsible for diagnosing and treating a patient. But what happens when AI plays a role in that decision? If an AI system misreads a scan and a patient suffers, who takes the blame: the doctor, the hospital, or the software developer? The study warns that AI can create "responsibility gaps," where no one is accountable when things go wrong. Without clear regulations and ethical guidelines, this could undermine patient trust in AI-assisted care.

Doctors and AI: A Trust Issue

The second major challenge is human behavior, specifically how doctors interact with AI. Studies show that while some physicians hesitate to trust AI recommendations (fearing they will be held responsible for errors), others may rely on them too much, leading to "automation bias." Striking the right balance is critical. Doctors need to trust AI enough to use it effectively, but not so much that they ignore their own expertise.

The Technology Factor: Making AI Understandable

AI's biggest weakness is that it often functions as a "black box." Complex machine-learning models can deliver accurate diagnoses, but they rarely explain how they arrived at their conclusions. That's a problem in medicine, where doctors must justify their decisions to patients. While researchers are working to make AI more transparent, this remains a major hurdle to widespread adoption.

The Path Forward: AI as a Partner, Not a Replacement

The key takeaway from this research is that AI should be viewed as a supportive tool, not a replacement for human doctors. Medicine is a delicate balance of empathy, judgment, and data. To integrate AI responsibly, hospitals and policymakers must focus on ethical frameworks, training programs, and technology that enhance rather than undermine doctor-patient relationships. If we approach this cautiously and thoughtfully, AI could be a powerful ally in the fight against disease. However, if we rush or overlook the human element, we risk losing the human touch that defines excellent medicine.