AI Confusion in Mental Health Advice: The Debate Over Google’s Overviews

Posted 1 day ago

31/2026

Mind, one of the largest mental health charities in England and Wales, has launched a pioneering year-long inquiry into how AI is shaping public understanding of mental health. The move follows a Guardian investigation which found that Google’s AI-generated summaries sometimes give misleading advice that can adversely affect people's cognitive health.

Mind's inquiry highlights the vital role mental health professionals, policymakers, and tech stakeholders play in safeguarding vulnerable minds amid AI's rapid growth.

Google’s AI Overviews are summary boxes that appear at the top of search results, designed to save time by condensing information on everything from weather forecasts to medical conditions. These summaries reach around 2 billion users each month and are created using generative AI that analyzes online content to provide instant answers.

At first glance, this innovation appears beneficial. However, according to experts at Mind and evidence from The Guardian, the actual situation is more concerning. In multiple test cases, the AI provided incorrect and potentially dangerous advice on serious health issues, including mental health conditions such as psychosis and eating disorders. Experts warned that such advice could mislead people who are in crisis or experiencing cognitive decline.

“There’s a real danger here,” said one Mind specialist. “Instead of guiding users to trusted, nuanced information, these summaries can replace it with oversimplified and misleading content that sounds authoritative, and that can be profoundly harmful when people are already vulnerable.”

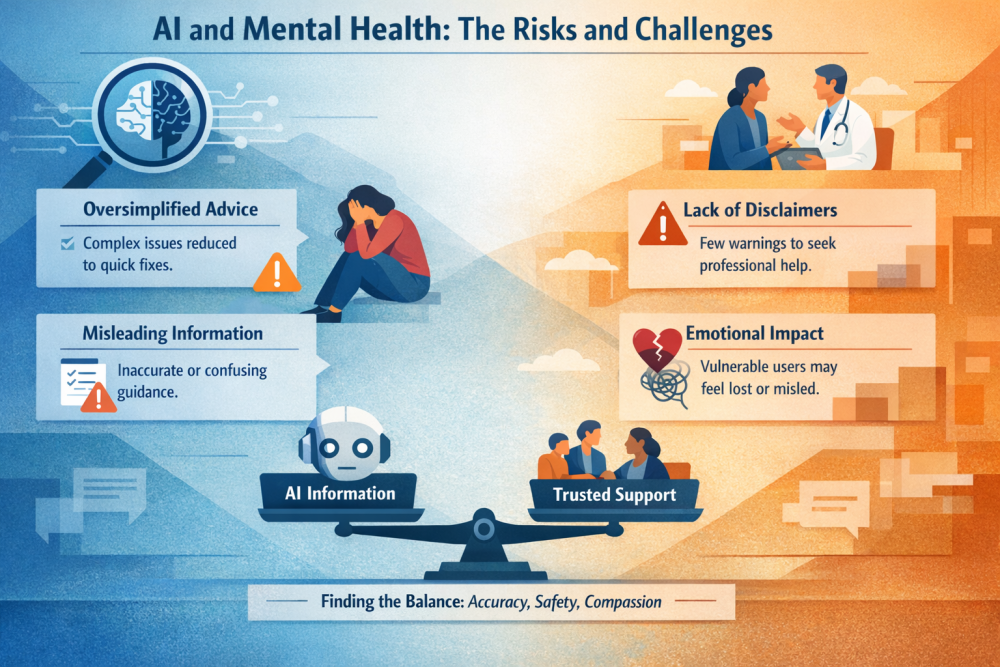

Risk Without Context: What Goes Wrong

Several patterns arise from the criticism:

- Oversimplification: Mental health is complex, and AI summaries often reduce that complexity into short, clear-cut statements. This can remove the nuance needed to understand symptoms, treatment options, and when to seek help.

- Misleading authority: Many users assume that whatever appears at the top of search results has been verified and is trustworthy. The AI’s confident tone can hide its mistakes, leading people to accept incorrect guidance as fact.

- Downplayed warnings: Separate reporting has shown that Google sometimes buries disclaimers about the limitations of its AI, meaning users may not see a clear message encouraging them to consult health professionals.

For someone struggling with anxiety, an eating disorder, or thoughts of self-harm, these shortcomings are far from trivial. They can determine whether that person seeks proper help or spirals into confusion and doubt.

The Inquiry: What’s at Stake

Mind’s year-long commission aims to unite mental health professionals, people with lived experience, policy experts, and tech company representatives to examine how AI is currently used and how it should be used in mental health information.

The goal isn’t to condemn AI entirely. In fact, supporters acknowledge the significant potential of artificial intelligence to expand access to support and knowledge, especially in areas with limited mental health resources. However, charity leaders emphasize that innovation must be linked to safety, accuracy, and empathy, not just speed and convenience.

“People deserve information that is safe, accurate, and grounded in evidence, not untested technology presented with a veneer of confidence,” said Mind’s chief executive.

The controversy surrounding Google’s AI Overviews is part of a larger debate about how artificial intelligence relates to public health, particularly mental health. Experts outside this specific case have also expressed concerns that AI may misinterpret emotional cues, reinforce biases, or even provide misleading psychological advice when users seek help.

Both the risks and the benefits hinge on how well these systems are tested, monitored, and regulated, which underscores the role the public can play in shaping trustworthy AI.

Looking Ahead: What Needs to Take Place

Mind’s inquiry could establish the foundation for stronger safety standards, clearer regulatory guidance, and collaborative frameworks that ensure AI tools support rather than hinder public understanding of mental health. Advocates are calling for:

- Transparent algorithms and safeguards that make clear how AI reaches its conclusions.

- More prominent disclaimers that urge users to verify information with medical professionals.

- Human oversight at every stage of health-related AI development.

As artificial intelligence advances, so must the ways we safeguard citizens’ health, especially those who are most vulnerable. The current debate isn’t just about technology; it’s about trust, ethics, and the responsibility of tech giants whose influence reaches into the most personal aspects of our lives.

Original Sources for the Above Article

1. Mind launches inquiry into AI and mental health after Guardian investigation

2. ‘Very dangerous’: a Mind mental health expert on Google’s AI Overviews