227/25 AI, Academia, and the Question of Sustainability

Posted 2 months ago

🚨 Big Picture

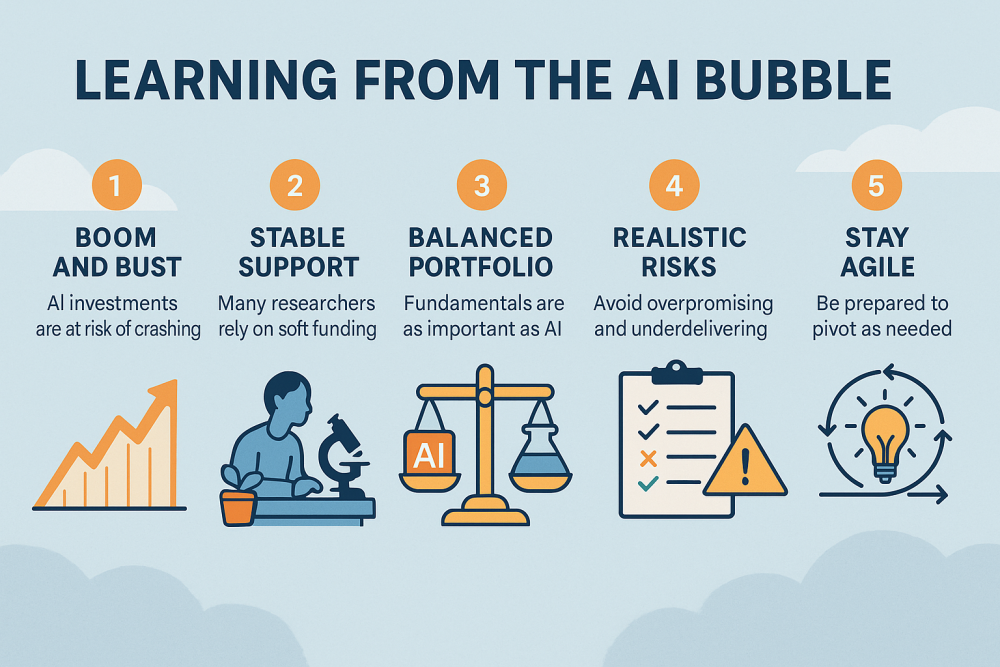

We are currently witnessing an extraordinary surge in the development and deployment of artificial intelligence technologies, fueled by unprecedented financial investment, widespread enthusiasm, and a rapidly expanding start-up ecosystem. However, an increasing number of experts are now cautioning that this momentum may reflect a speculative bubble that could ultimately collapse. A recent article critically examines the potential consequences of such a downturn for the trajectory of scientific research worldwide.

🔍 What’s going on?

- A flood of investment and attention is flowing into AI: from startups promising “the next big thing” to major companies building data centers and models. But not all of it is backed by proven results.

- Analysts are pointing to warning signs: lofty valuations and an avalanche of new AI projects, many of them early-stage or speculative. The article calls this the “AI bubble”.

📉 If the bubble bursts — what could happen?

The article outlines several potential knock-on effects that would ripple into the world of research and science:

- Funding freezes and job cuts

If companies and investors pull back money after a crash, research labs that rely on soft funding for AI-related work might struggle. Grants could decrease, and hiring may pause.

- Start-ups vanish

Many small AI companies ride the hype wave. If the wave crashes, many could struggle, which means the research, prototypes, and innovations they were expected to produce might vanish or be delayed.

- Redirect-research risk

Programs and labs that have shifted heavily toward “AI applied to everything” might see their niche shrink. The excitement could move to other fields, leaving some recent projects behind.

- Long-term trust erosion

If big promises don’t come true, public, academic, and investor confidence in AI research, particularly applied research, might decline. This could slow down future collaboration, regulatory support, and the adoption of new tools.

🎓 Key takeaways for academics/labs

The following are essential considerations when integrating AI into a university's governance systems:

- Institutions should avoid over-committing to the idea that “everything must now be AI.” While AI has real capabilities, not every problem is best solved with it, and funders might become more cautious.

- Diversify research portfolios: maintaining strength in foundational science, translational work, and other methodologies beyond just “AI-driven” approaches might offer better long-term resilience.

- Monitor funding signals: if investor sentiment shifts away from high-risk AI ventures, there may be opportunities to pivot or to secure funding that has been redirected elsewhere.

- Emphasize clear metrics and realistic deliverables: in periods of hype, bold claims fly, but when the boom ends, rigorous, transparent results will matter even more.

- Enhance interdisciplinarity and real-world linkage: research that shows concrete benefit, societal impact, and a straightforward path to implementation may fare better if general enthusiasm recedes.

💡 Bottom line

In short, the surge in AI is exciting; it holds real promise. But the article warns that the bigger the hype, the greater the risk of a fall. If the bubble does burst, the ripple will be felt not just in tech companies and stock markets, but also in university labs, research programs, and the rhythm of science itself.

For institutions like yours, which bridge technology, skills, and applied research, this is a moment to pause and plan: ride the wave, yes — but build so that you’re not swept away if the wave breaks.